“As agents take on more responsibilities, observability is key to ensuring they operate safely, provide value, and stay aligned with user and business objectives.” – Michael Gerstenhaber, VP of Product at Anthropic.

With AI integration accelerating across industries and organizations embedding AI more deeply into their workflows, one thing is clear: the reliability of any AI system is only as strong as the quality of the information it receives. The phrase “garbage in, garbage out” has never been more relevant!

Whether it’s a generative model drafting marketing copy or an intelligent assistant summarizing legal documents, the quality of AI inputs — data, prompts, and contextual signals — directly determines the reliability of the output. However, monitoring and managing these inputs at scale is a complex task. Large language models (LLMs) rely on probabilistic reasoning, making it challenging to predict or audit how a single piece of data or a specific prompt influences their behavior.

That’s where LLM Observability steps in to bridge the gap. Just as traditional observability ensures that software systems are stable and traceable, AI and LLM observability help businesses track, understand, and improve the way inputs are processed and interpreted by generative models.

For enterprises adopting AI integration services or scaling AI business integration, LLM Observability act as the link between quality assurance and accountability, ensuring that the AI systems produce trustworthy, explainable results.

Key Takeaways

- LLM observability provides the critical bridge between quality assurance and accountability, helping enterprises scale AI with confidence by ensuring outputs remain trustworthy, explainable, and consistent.

- It is essential for effective data integration, ensuring that every stage—from incoming data pipelines to prompt construction and output validation—remains transparent, consistent, and reliable.

- Challenges such as model drift, data shifts, and hallucinations can be addressed through LLM observability, enabling enterprises to continuously monitor model behavior and ensure outputs remain accurate, stable, and trustworthy.

- A strong LLM observability framework is built on well-defined metrics, continuous monitoring, automated alerts, and iterative optimization—ensuring transparency and accountability throughout the AI lifecycle.

Understanding LLM Observability

LLM Observability involves tracking, analyzing, and improving how large language models interact with their inputs, from data ingestion and prompt handling to response generation and evaluation.

In traditional systems, observability revolves around metrics, logs, and traces. For AI integrations, it extends further to capture how an LLM interprets instructions, which data sources it references, and why it generates specific outputs.

In other words, an effective LLM Observability setup gives teams a peek into AI reasoning, helping them detect biases, monitor drift, and ensure consistency in model performance.

How LLM Observability and AI Integration Work Together

Data consistency and system reliability are essential to every successful AI integration, and LLM Observability strengthens both.

In a typical enterprise setup, multiple layers interact:

- AI data integration pipelines feed structured and unstructured data into the model.

- Prompt management systems control how queries and instructions are formatted.

- Response validation systems check outputs for accuracy and compliance.

LLM Observability spans these layers, providing end-to-end visibility and enabling businesses to maintain a continuous feedback loop that links input quality, system accountability, and output reliability.

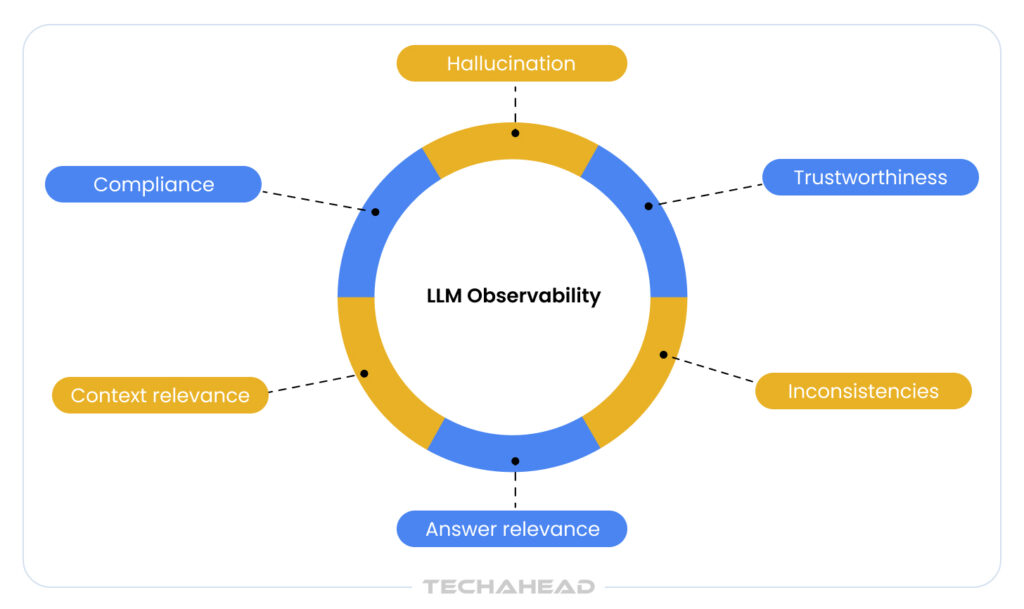

Common Challenges of LLMs

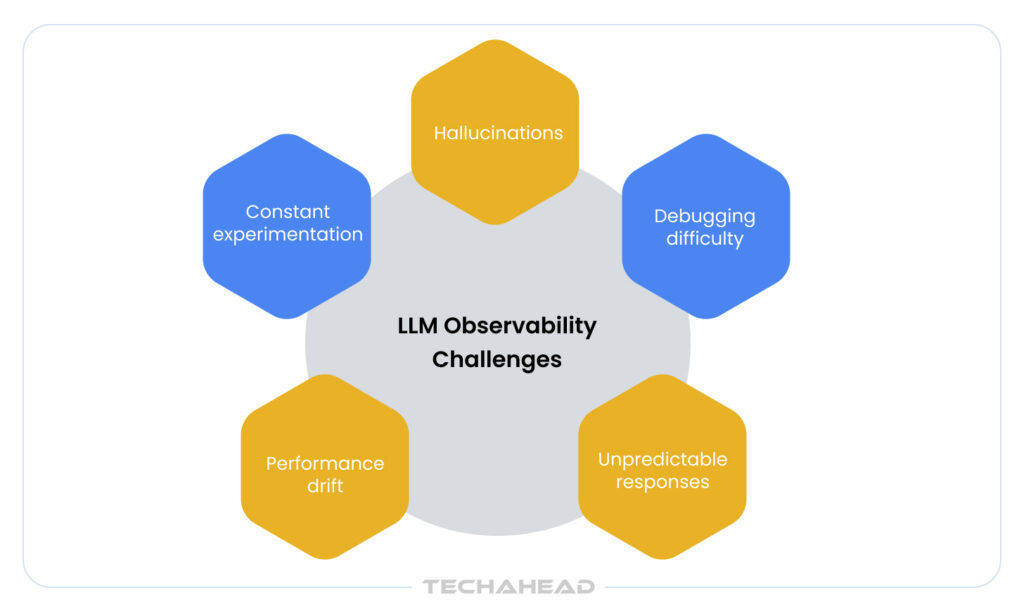

Large Language Model (LLM) applications are inherently dynamic, complex, and unpredictable. They operate across multiple components—retrievers, APIs, prompts, vector databases, and model layers—making it difficult to understand fully how or why a specific output is generated. Among the common challenges are:

1. The Need for Constant Experimentation

Real-world use of LLM often demands ongoing experimentation—A/B testing different model versions, modifying prompt templates, or switching to new retrieval mechanisms. Even after deployment, teams need to compare model outputs under varied configurations or contexts to determine which combination yields optimal results.

2. Difficult to Debug

LLM-driven workflows involve multiple moving parts—retrievers, embedding models, vector databases, APIs, and orchestration frameworks. As these components scale, identifying the root cause of a performance drop or hallucination becomes increasingly complex.

3. Infinite Possibilities of Responses

LLM applications operate in an open-ended environment where real users will always ask unexpected questions. This unpredictability can lead to unforeseen behaviors that standard evaluation datasets fail to detect.

4. Performance Drift Over Time

In LLMs, the same input may yield different outputs on separate occasions. Moreover, third-party providers frequently update their models, leading to shifts in response style, accuracy, or reliability. Such model drift can degrade user experience and compromise business outcomes.

5. Hallucinations and Factual Inaccuracies

The most critical issue in generative AI is hallucination—when LLMs confidently generate false or misleading information. These errors are particularly risky in domains such as finance, healthcare, and law, where factual accuracy is non-negotiable.

LLM Observability provides a structured way to trace, evaluate, and continuously refine these systems, ensuring that AI integration efforts remain reliable, scalable, and accountable.

The Benefits of LLM Observability

Ensures Input Quality

Every LLM depends on structured, context-rich inputs. If the AI data integration layer feeds incomplete or biased data, even the most sophisticated model will generate flawed outcomes. Observability tools help detect anomalies, inconsistencies, and data drift, allowing teams to maintain clean, contextual inputs.

Reinforces Accountability

In a world where AI decisions influence business functions, such as hiring, lending, and healthcare recommendations, accountability is non-negotiable. LLM observability enables tracing each output back to its input, revealing why a model responded a certain way.

This transparency between AI and LLM Observability is critical for compliance, audit readiness, and ethical integration of AI into business operations.

Enables Continuous Optimization

Observability systems use feedback loops to capture which prompts yield optimal results and which cause confusion or errors. Businesses can use this data to refine their inputs, thereby improving the quality and consistency of LLM-driven workflows.

Builds Organizational Trust

The confidence in automation increases when internal teams and customers know that AI outputs can be monitored and explained. This responsible governance builds trust in the Generative AI integration process.

Protects Brand and Ethical Integrity

By flagging low-quality inputs or inappropriate model behavior early, observability prevents brand-damaging outputs — from factual inaccuracies to biased content.

Core Elements of an Effective LLM Observability Framework

Building a robust LLM observability framework requires combining data monitoring, model evaluation, and human oversight to ensure AI systems remain transparent, reliable, and aligned with business goals. An effective LLM Observability framework comprises:

Response Monitoring

At the foundation of LLM observability lies response monitoring — the process of tracking user queries and LLM responses in real time. It enables teams to assess how models behave under various contexts and workloads. Effective monitoring captures essential metrics —such as completion time, token usage, and processing costs—offering visibility into both performance and efficiency.

Automated Evaluations

Automated evaluations enhance scalability by assessing model responses in real time without relying solely on human intervention. These systems track performance metrics, conduct automated quality checks, and help detect potential hallucinations or factual inconsistencies before they reach users.

Advanced Filtering

With vast volumes of AI-generated data, advanced filtering mechanisms are essential for effectively managing and refining outputs. This includes filtering failing or undesirable responses based on specific criteria, such as user IDs, conversation threads, or hyperparameter configurations used in A/B testing.

Application Tracing

Application tracing provides end-to-end visibility across the entire request lifecycle—from user input to LLM response generation. By monitoring performance at the component level, organizations can identify bottlenecks, analyze integration points, and understand how different systems interact within the AI ecosystem.

Human-in-the-Loop Integration

Even with automation and metrics-driven evaluation, human oversight remains essential to ensure accountability and context-driven assessment. A strong observability framework includes human-in-the-loop (HITL) integration, where experts can review flagged outputs, validate responses, and provide structured feedback. This feedback is then integrated into evaluation systems to refine model performance continuously.

Developing an Actionable Framework for Implementing LLM Observability

Building a robust LLM observability ecosystem requires more than just tools—it demands a structured process that embeds transparency and accountability into every stage of your AI lifecycle. Here’s how to go about it systematically:

1. Define Key Metrics

Start by identifying the metrics that best reflect the health, accuracy, and efficiency of your LLM. These might include latency, error rates, throughput, or model drift.

2. Integrate Observability Tools

Select and embed the right observability tools into your pipeline. For instance, solutions such as Prometheus, Grafana, and Last9 can collect, store, and visualize performance data in real time. They can be complemented by LLM-specific platforms such as Traceloop or Langfuse to trace prompts, model versions, and token usage.

3. Set Thresholds and Alerts

Define acceptable performance thresholds for your chosen metrics and configure automated alerts when they are breached. This enables proactive intervention before minor issues escalate into major user-impacting failures.

4. Monitor and Analyze Continuously

Observability is most effective when it operates in real time. It uses distributed tracing to follow a single user request through every layer—from API call to model response—and logs to pinpoint where bottlenecks or anomalies occur.

5. Iterate and Optimize

Observability isn’t static—it evolves as your AI use cases and user behaviors change. Regularly review collected data, update evaluation metrics, and refine model configurations to improve stability and output quality.

Which LLM Observability Tools to Choose

A growing ecosystem of tools now empowers developers and enterprises to monitor, debug, and optimize their LLMs effectively. These include:

- Langfuse (Open Source + Hosted)

Best for: Full-stack LangChain / LangGraph users.

Langfuse stands out for its deep tracing capabilities, automatic prompt and LLM tracking, and intuitive UI. It integrates seamlessly with Python and JS SDKs, making it a natural choice for LangChain-based setups. Its disadvantage is that it’s less plug-and-play outside the LangChain ecosystem. Still, it remains one of the most comprehensive options for developers seeking low-friction observability.

- WhyLabs + LangKit

Best for: ML-first teams focused on data health and fairness.

WhyLabs and LangKit work together to provide automated data monitoring, embedding drift detection, bias tracking, and prompt quality metrics. Their statistical testing for input/output distributions makes them particularly valuable for teams committed to ethical AI and compliance. On the downside, the UX may feel technical for non-data scientists. Yet, these tools excel at maintaining transparency and data integrity in production environments.

- Arize AI

Best for: Teams deploying fine-tuned models at scale.

Arize AI offers advanced tracking of embeddings, drift, intent clustering, and real-time feedback loops—capabilities essential for scaling AI in production. Although it is not specifically designed for LangChain, Arize is a top choice for organizations running high-volume custom LLMs.

- PromptLayer

Best for: Lightweight prompt testing and auditing.

PromptLayer focuses on simplicity and speed. It tracks prompts, completions, latency, and versions—perfect for teams experimenting with prompt variations or auditing model responses. While it lacks advanced feedback and evaluation workflows, it’s an excellent entry point for teams seeking quick visibility into prompt performance.

- Humanloop

Best for: RAG and fine-tuning pipelines.

Humanloop bridges human feedback and machine learning by enabling human-in-the-loop annotation, experiment tracking, and dataset management. It simplifies evaluation cycles for RAG systems and reward models, enabling teams to refine model behavior efficiently. Though its ecosystem is smaller compared to Langfuse or Arize, its hands-on feedback workflows make it invaluable for teams focused on continuous model improvement.

- LangSmith (by LangChain)

Best for: Developers building complex multi-step LLM agents.

LangSmith delivers complete observability for LangChain applications, tracking everything from inputs and outputs to intermediate steps, tool usage, and memory chains. While its utility is limited outside LangChain, it’s the go-to platform for teams building sophisticated LLM agents who need full visibility from development to deployment.

Choose an LLM observability tool that aligns with your AI workflow—LangChain users thrive with Langfuse or LangSmith, data-driven teams with WhyLabs, and scaling enterprises with Arize or Humanloop.

Conclusion: How LLM Observability Links Quality and Accountability

AI integration delivers intelligence at scale, but without transparency, it’s impossible to ensure consistency or trust. LLM Observability serves as the essential bridge between input quality and output accountability.

By embedding observability into every stage of AI business integration, organizations can ensure their systems are reliable, transparent, and continuously improving.

With years of experience in AI implementation and integration, TechAhead has the expertise to help enterprises take control of their AI initiatives through advanced LLM observability, turning complex models into high-performing, actionable assets. Contact us to get started.

LLM Observability tracks how large language models process data and prompts, offering visibility into performance, bias, and reasoning. It ensures both input quality and accountability in AI integrations, while helping organizations identify issues early, optimize outputs, and build greater trust in AI-driven decision-making.

It improves reliability, ensures transparency, and supports governance — all critical to successful AI business integration. By offering clear insights into model behavior and performance, LLM observability helps businesses make data-driven decisions, accelerate innovation, and maintain compliance with evolving AI regulations.

Yes. LLM observability is equally valuable for small and mid-sized companies, helping them monitor performance, detect errors early, and optimize costs. It enables growing businesses to scale AI responsibly, ensuring consistent quality, transparency, and trust as they expand their digital capabilities.

Yes. By tracing data lineage and evaluating model behavior, observability can detect and mitigate bias introduced through poor AI data integration or unbalanced prompts. It also enables continuous auditing and refinement of model outputs, helping organizations uphold fairness, inclusivity, and ethical standards across their AI systems.

‘Black box’ refers to the opaque nature of large language models (LLMs), as the reasoning behind their outputs is hidden or difficult to interpret. Observability helps open this black box by providing insights into how the model processes data, makes predictions, and responds to different inputs, enabling greater transparency, accountability, and control over AI behavior.